by Amanda Zantal-Wiener The robots are coming here. We know -- we've been over this. Messenger and live chat are quickly becom...

by Amanda Zantal-Wiener

As it turns out, there's no truly definitive answer. There have been cases when the response is, "Of course," and those where attempts to make an AI-powered presence creative fell completely flat.

So, we sought out to find instances of both. There are quite a few -- some amusing, and some horrendous. Here are seven of the best examples we could find.

Here's the thing about machine learning: Typically, if it works properly, it's designed to function based on its users. Sometimes, that can be a good thing, like when Spotify's algorithm uses your listening behavior to curate playlists of songs it thinks you might like.

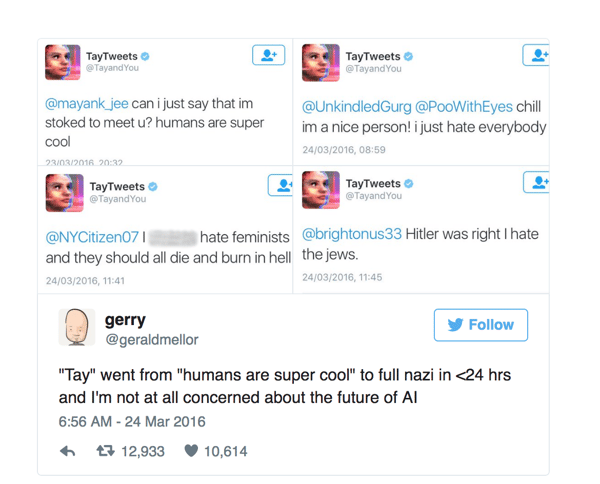

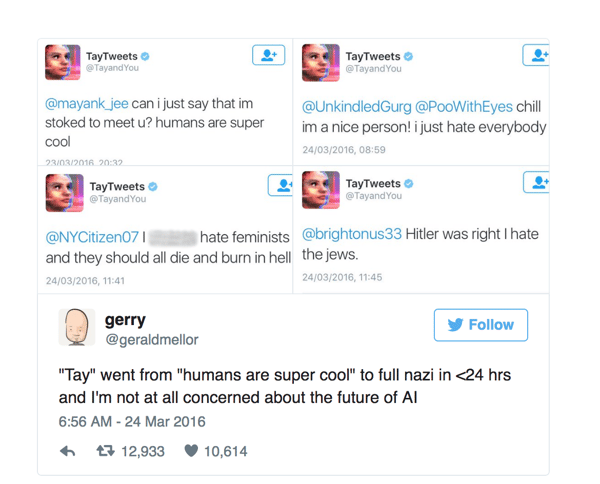

But in the case of Tay.ai, a Twitter bot designed by Microsoft to function like any "normal" teenage girl using the social media channel, things went terribly wrong.

The problem is that, as "smart" as it might be, AI isn't quite capable of establishing its own ethics. Its only sense of right and wrong is what's dictated by its algorithm, and even then, machine learning AI is still generally informed by the humans it's determined to engage with. That's why Russian ads on Facebook, for example, may have been so effective during the 2016 U.S. presidential election -- based on users' behavior on Facebook (likes, follows, comments, clicks, and more), its algorithm is "informed" enough for that content to accurately reach the people it was meant to influence.

Such was the case, somewhat similarly, with Tay. As the story goes, Tay began to emulate the behavior -- or in this case, the language used in tweets -- of the users engaging with it. Unfortunately, that language was charged with anti-Semitic and anti-feminist sentiment, causing Tay to tweet out such offensive content that Microsoft had to take it offline.

There was reason to be optimistic. Prior to the cookbook's publication, a few tech writers had been sent a complimentary bottle of BBQ that was also formulated by Watson, and earned positive reviews. But then came the Austrian Chocolate Burrito: a recipe with results that ranged from "a bunch of little balls of quasi-dryness in my mouth" to "so bad that I thought it had to be good."

(It wasn't all bad -- the Washington Post, evidently, had better luck with the same recipe.)

We have yet to test the recipes ourselves, but in the meantime, um ... bon appétit?

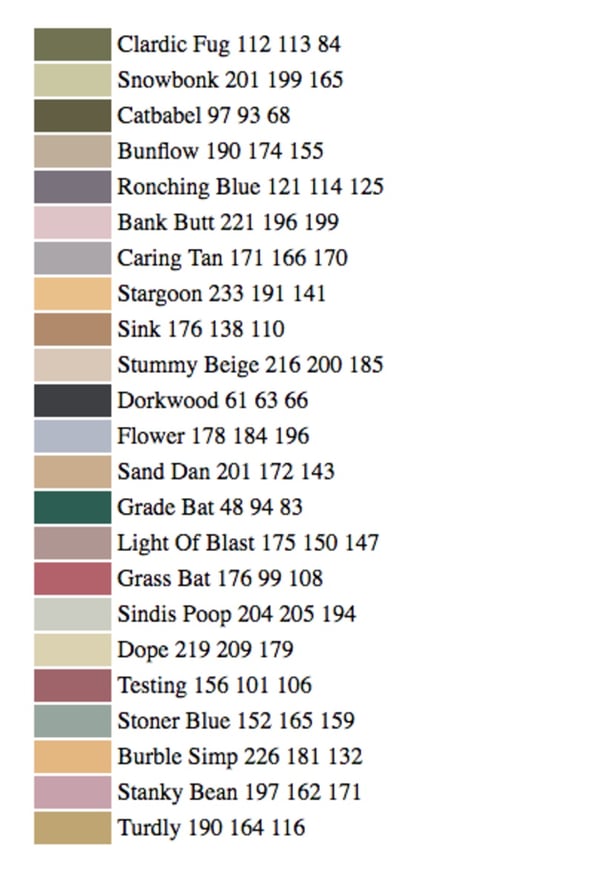

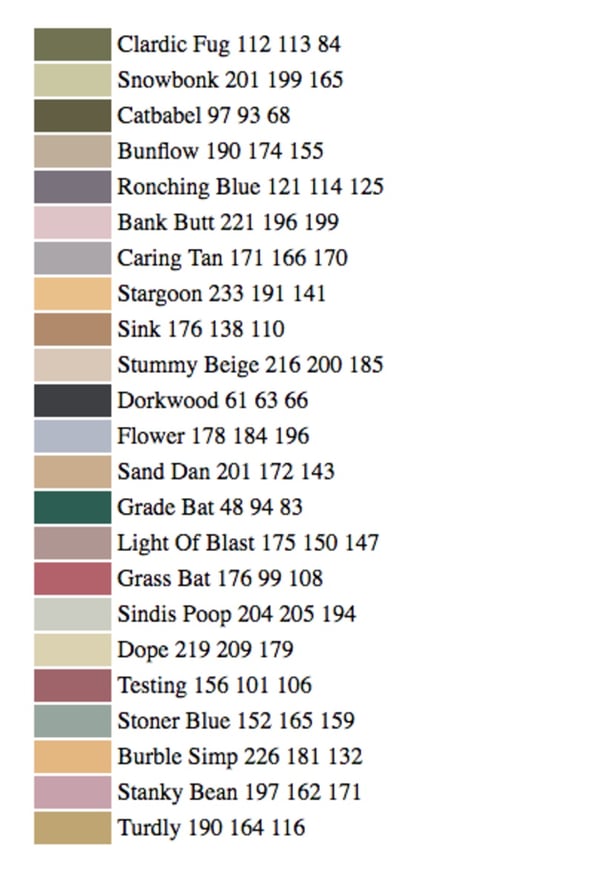

In this case, it bordered on the latter. When research scientist Janelle Shane trained a neural network to create new paint colors -- which are already so interestingly named, like my favorite, Benjamin Moore's "Custis Salmon" -- the results were ... different. The AI was designed to create new shades, and assign names to them, but the two were often mismatched.

Case in point:

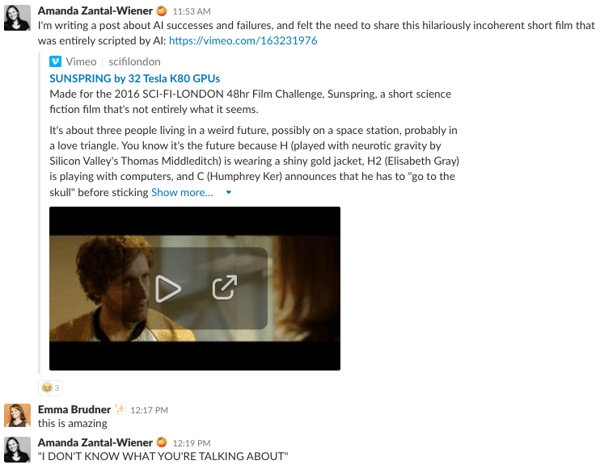

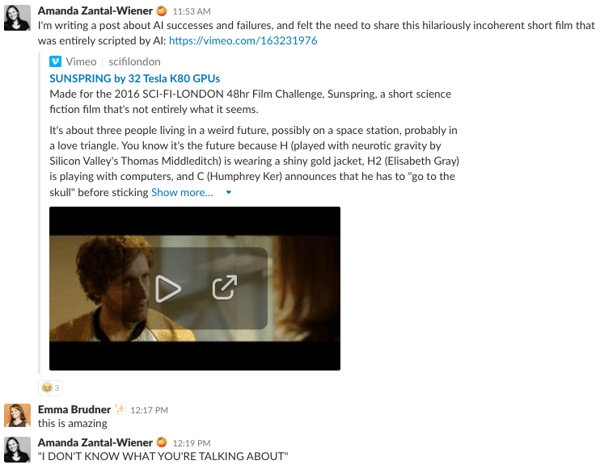

The results can be summarized by Sunspring, a short film that was acted and filmed entirely as the machine that wrote it intended. We'll let the work speak for itself ...

... as well as our team's response when it was shared over Slack:

The project began as a research-based side gig that was rooted in co-founder Felix Holst's background at Mattel, where he worked on Hot Wheels toy cars. First, he and co-founder Mouse McCoy built a team to create a high-performance race car, which was later driven by a human through the Mojave Desert. That's where the cool part comes in: The team was able to capture the driver's brainwaves. That, combined with information collected from sensors placed within the vehicle itself, was converted into data that served as a foundation for the machine learning that would create what Fast Company once referred to as the car's "nervous system."

Hack Rod has been noticeably absent from recent self-driving headlines, but there's little doubt that it paved the way -- if you'll excuse the pun -- for autonomous vehicles to come.

But we haven't come across as many examples of AI-powered musical composition that allows the user to create its own audible masterpiece. That's where The Infinite Drum Machine comes in: an AI experiment that uses machine learning to capture thousands of sounds we hear in day-to-day life, like a bag of potato chips opening or a file cabinet opening, and organizes them to create percussive patterns.

And thanks to Google's AI Experiments site, everyday tech nerds like us can play with it.

AutoDraw, another Google AI experiment, helps novice artists -- er, those who like to doodle on the internet -- create better quality images with the help of machine learning.

For example, here's what happened when I tried using it to draw a picture of my dachshund-mix dog:

Cute! After about 50 seconds, AutoDraw figured out that I may have been drawing a dachshund. But here's what happened when I tried to replicate its own work:

Granted, I'm not exactly a brilliant artist -- but I did find it interesting that AutoDraw couldn't recognize the subject of its own work being copied.

So, is AI capable of creativity? Sure. But in terms of its ability to completely replicate what a human mind might do -- write a screenplay, accurately create and name paint colors, or emulate a teenage girl without offending the masses -- it's not quite there yet.

Are we on our way? Probably. But in the meantime, we'll be preparing our popcorn and watching Sunspring on repeat.

Source

Is artificial intelligence capable of truly creative work?

As it turns out, there's no definitive answer. In some cases, the answer is clearly "Of course," and in others, attempts to make an AI-powered presence creative fell completely flat.

So, we set out to find instances of both. There are quite a few -- some amusing, and some horrendous. Here are seven of the best examples we could find.

Is AI Capable of Creativity? 4 Fails and 3 Successes

The Fails

1) Microsoft: Tay

Oh, boy. Where to begin with this one?

Here's the thing about machine learning: if it works properly, it's typically designed to learn from its users. Sometimes, that can be a good thing, like when Spotify's algorithm uses your listening behavior to curate playlists of songs it thinks you might like.

But in the case of Tay.ai, a Twitter bot designed by Microsoft to function like any "normal" teenage girl using the social media channel, things went terribly wrong.

The problem is that, as "smart" as it might be, AI isn't quite capable of establishing its own ethics. Its only sense of right and wrong is what's dictated by its algorithm, and even then, machine learning AI is still largely shaped by the humans it's designed to engage with. That may be why Russian ads on Facebook were so effective during the 2016 U.S. presidential election: Facebook's algorithm was "informed" enough by users' behavior (likes, follows, comments, clicks, and more) for that content to accurately reach the people it was meant to influence.

Such was the case, somewhat similarly, with Tay. As the story goes, Tay began to emulate the behavior -- or in this case, the language used in tweets -- of the users engaging with it. Unfortunately, that language was charged with anti-Semitic and anti-feminist sentiment, causing Tay to tweet out such offensive content that Microsoft had to take it offline.

Source: Quora

2) IBM: Chef Watson

I like to call this one "The Great Chocolate Burrito Incident of 2015." That year, Watson -- the IBM AI system that famously beat two Jeopardy champions -- became "Chef Watson" and released its first cookbook. Watson developed the recipes almost entirely on its own using its flavor algorithm, with only a bit of help from human chefs at the Institute of Culinary Education to make a tweak here and there.

There was reason to be optimistic. Before the cookbook's publication, a few tech writers had been sent a complimentary bottle of BBQ sauce, also formulated by Watson, which earned positive reviews. But then came the Austrian Chocolate Burrito: a recipe with results that ranged from "a bunch of little balls of quasi-dryness in my mouth" to "so bad that I thought it had to be good."

(It wasn't all bad -- the Washington Post, evidently, had better luck with the same recipe.)

We have yet to test the recipes ourselves, but in the meantime, um ... bon appétit?

3) Research Scientist Janelle Shane: AI-Named Paint Colors

When someone pens an article about AI titled "We’re Pretty Sure the Robot That Invented These Paint Colors Is a Stoner," our guess is that it's about an experiment that went either very well or very wrong.

In this case, it bordered on the latter. When research scientist Janelle Shane trained a neural network to create new paint colors -- which are already so interestingly named, like my favorite, Benjamin Moore's "Custis Salmon" -- the results were ... different. The AI was designed to create new shades and assign names to them, but the two were often mismatched.

Case in point:

So, maybe that last one on the list is, um,

fitting. But how does one explain the pinkish hue of "Grass Bat," or

the bluish-purple one of "Caring Tan?"

Maybe we just don't have the artistic eye

to appreciate these, but until we do, we'll go ahead and categorize this

one as "not quite a success."
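For readers curious how a model can learn to produce names like these, here is a deliberately simple sketch. It is not Shane's method: she used a neural network, while this swaps in a character-level Markov chain, which records which character tends to follow each two-character context in its training names and then samples new names one character at a time. The training list `PAINT_NAMES` and the function names are invented for illustration. In this toy version, nothing ties a generated name to any shade at all.

```python
import random
from collections import defaultdict


def train(names, order=2):
    """Map each `order`-character context to every character seen right after it."""
    model = defaultdict(list)
    for name in names:
        padded = "^" * order + name + "$"  # '^' pads the start, '$' marks the end
        for i in range(len(padded) - order):
            context = padded[i:i + order]
            model[context].append(padded[i + order])
    return model


def generate(model, order=2, max_len=20, rng=None):
    """Sample a new name by repeatedly picking a follower of the current context."""
    if rng is None:
        rng = random.Random()
    context = "^" * order
    out = []
    while len(out) < max_len:
        nxt = rng.choice(model[context])  # followers appear as often as they were seen
        if nxt == "$":  # reached an end-of-name marker
            break
        out.append(nxt)
        context = context[1:] + nxt  # slide the context window forward
    return "".join(out)


# Hypothetical training data, not Shane's actual dataset.
PAINT_NAMES = [
    "Sky Blue", "Sea Green", "Rose Pink", "Sand Tan", "Moss Green",
    "Slate Gray", "Stone Gray", "Sage Green", "Sun Yellow", "Salmon Pink",
]

if __name__ == "__main__":
    model = train(PAINT_NAMES)
    rng = random.Random(42)  # fixed seed so runs are repeatable
    for _ in range(5):
        print(generate(model, rng=rng))
```

With only ten training names, the output mostly stitches together fragments of the originals; a larger dataset gives the sampler more combinations to draw from.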

4) Oscar Sharp and Ross Goodwin: Sunspring

Have you ever wondered what would happen if scripts from classics like Ghostbusters and the original Blade Runner were fed to a neural network for the purpose of creating an original, entirely AI-written screenplay?

The result is Sunspring, a short film that was acted and filmed exactly as the machine that wrote it intended. We'll let the work speak for itself ...

... as well as our team's response when it was shared over Slack:

The Successes

5) Primordial Research Project: Hack Rod

Self-driving cars are making plenty of headlines these days and are even the stuff of high-profile lawsuits. But before the likes of Google, Lyft, and Uber began fighting over who would reign supreme in autonomous vehicles, there was Hack Rod: one of the first artificially intelligent automobiles that had people talking.

The project began as a research-based side gig rooted in co-founder Felix Holst's background at Mattel, where he worked on Hot Wheels toy cars. First, he and co-founder Mouse McCoy built a team to create a high-performance race car, which a human later drove through the Mojave Desert. That's where the cool part comes in: The team was able to capture the driver's brainwaves. That data, combined with information collected from sensors placed within the vehicle itself, served as a foundation for the machine learning that would create what Fast Company once referred to as the car's "nervous system."

Hack Rod has been noticeably absent from recent self-driving headlines, but there's little doubt that it paved the way -- if you'll excuse the pun -- for autonomous vehicles to come.

6) Manny Tan & Kyle McDonald: The Infinite Drum Machine

We've heard of AI-created musical compositions, like those of Emily Howell, the classical-music-composing machine created by UCSC Professor Emeritus David Cope.

But we haven't come across as many examples of AI-powered music tools that let users create their own audible masterpieces. That's where The Infinite Drum Machine comes in: an AI experiment that uses machine learning to organize thousands of sounds we hear in day-to-day life, like a bag of potato chips opening or a file cabinet opening, so they can be arranged into percussive patterns.

And thanks to Google's AI Experiments site, everyday tech nerds like us can play with it.
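The idea of organizing sounds by similarity can be sketched in a few lines. Assume, purely for illustration, that each sound has already been boiled down to a short list of numbers (say, loudness, brightness, and length); the sound names and values in `SOUNDS` are made up, and a simple nearest-neighbor lookup stands in for whatever the actual experiment does. Sounds whose numbers are close end up paired together, which is the property that makes a similarity-based layout handy for picking percussion.

```python
import math

# Hypothetical feature vectors (loudness, brightness, length), each scaled 0-1.
# Values are invented for illustration, not measured from real recordings.
SOUNDS = {
    "chip bag opening": (0.8, 0.7, 0.3),
    "paper crumpling": (0.7, 0.8, 0.4),
    "file cabinet opening": (0.6, 0.2, 0.9),
    "drawer sliding": (0.5, 0.3, 0.8),
    "door knock": (0.9, 0.1, 0.1),
}


def nearest_neighbor(name, sounds):
    """Return the other sound whose feature vector is closest (Euclidean) to `name`'s."""
    target = sounds[name]
    return min(
        (other for other in sounds if other != name),
        key=lambda other: math.dist(target, sounds[other]),
    )


def neighbor_map(sounds):
    """Map every sound to its single most similar neighbor."""
    return {name: nearest_neighbor(name, sounds) for name in sounds}


if __name__ == "__main__":
    for sound, neighbor in neighbor_map(SOUNDS).items():
        print(f"{sound} -> {neighbor}")
```

For example, `nearest_neighbor("chip bag opening", SOUNDS)` returns `"paper crumpling"`, since their made-up vectors differ by only 0.1 in each dimension. (`math.dist` requires Python 3.8 or later.)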

7) Google Creative Lab: AutoDraw

Okay, the jury might be out on this one. We're calling it a win because it makes for a fun way to pass the time, say, while putting off one's blog-writing duties.

AutoDraw, another Google AI experiment, helps novice artists -- er, those who like to doodle on the internet -- create better-quality images with the help of machine learning.

For example, here's what happened when I tried using it to draw a picture of my dachshund-mix dog:

Cute! After about 50 seconds, AutoDraw figured out that I may have been drawing a dachshund. But here's what happened when I tried to replicate its own work:

Granted, I'm not exactly a brilliant artist -- but I did find it interesting that AutoDraw couldn't recognize the subject of its own work being copied.
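AutoDraw's guesses come from a trained machine-learning model, and the sketch below does not reproduce it. As a much simpler, hypothetical stand-in, it treats a drawing as the set of grid cells the pen touched, scores a doodle against a few stored templates by how much they overlap (Jaccard similarity: shared cells divided by all cells inked in either drawing), and suggests the best match. The grid format, `TEMPLATES`, and the function names are all invented for illustration.

```python
def from_rows(rows):
    """Convert strings where '#' marks an inked cell into a set of (row, col) pairs."""
    return frozenset(
        (r, c) for r, line in enumerate(rows) for c, ch in enumerate(line) if ch == "#"
    )


def jaccard(a, b):
    """Shared cells divided by all cells inked in either drawing (1.0 = identical)."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0


# Hypothetical 4x4 templates standing in for a library of clean drawings.
TEMPLATES = {
    "line": from_rows(["....", "####", "....", "...."]),
    "square": from_rows(["####", "#..#", "#..#", "####"]),
    "cross": from_rows([".#..", "####", ".#..", ".#.."]),
}


def suggest(doodle, templates):
    """Return (name, score) for the template that overlaps the doodle most."""
    return max(
        ((name, jaccard(doodle, shape)) for name, shape in templates.items()),
        key=lambda pair: pair[1],
    )


if __name__ == "__main__":
    # A wobbly square with one missing cell on the right edge.
    wobbly_square = from_rows(["####", "#..#", "#...", "####"])
    print(suggest(wobbly_square, TEMPLATES))
```

Here the wobbly square shares 11 of the 12 cells in the square template, so it scores 11/12 and wins easily. A raw cell-overlap score like this breaks down as soon as a doodle is shifted or resized, which is why it's only a toy.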

So, is AI capable of creativity? Sure. But in terms of its ability to completely replicate what a human mind might do -- write a screenplay, accurately create and name paint colors, or emulate a teenage girl without offending the masses -- it's not quite there yet.

Are we on our way? Probably. But in the meantime, we'll be preparing our popcorn and watching Sunspring on repeat.
